Computational Performance

How fast a backtest executes depends on several factors: the number and complexity of the technical indicators computed in boatwright.Strategy.calc_signals(), the length of the dataframe, the number of orders generated and processed by the broker during boatwright.Backtest.run(), and the user’s hardware. The tests below backtest the MACD strategy, i.e. the simplest strategy, on a 2019 MacBook Pro (processor: 2 GHz quad-core Intel i5; memory: 16 GB 3733 MHz LPDDR4X).

First, timing a single backtest indicates that the bottleneck is not the calculation of the technical indicators but the backtest process itself:

data length: 437356

time calc_signals(): 0.89 s

time backtest(): 185.92 s

total_time: 186.81 s
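A per-stage breakdown like the one above can be collected with a small timing wrapper. The following is a minimal sketch, not the script that produced these numbers: `timed`, `fake_calc_signals`, and `fake_backtest` are hypothetical names, and the two stand-in functions would be replaced by the real calc_signals() and run() calls.

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


# Hypothetical stand-ins for the two stages being compared.
def fake_calc_signals(n):
    # Built in one pass, loosely mimicking a vectorized indicator step.
    return [i * 0.5 for i in range(n)]


def fake_backtest(signals):
    # Explicit row-by-row loop, loosely mimicking the backtest step.
    total = 0.0
    for s in signals:
        total += s
    return total


signals, t_signals = timed(fake_calc_signals, 100_000)
result, t_backtest = timed(fake_backtest, signals)

print(f"data length: {len(signals)}")
print(f"time calc_signals(): {t_signals:.4f} s")
print(f"time backtest(): {t_backtest:.4f} s")
print(f"total_time: {t_signals + t_backtest:.4f} s")
```

`time.perf_counter()` is used rather than `time.time()` because it is a monotonic, high-resolution clock intended for measuring short intervals.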

This is expected. The technical indicators in the MACD strategy are moving averages computed with pandas rolling windows, so they benefit from the pandas data processing library, which is highly optimized for performance, with critical code paths written in Cython or C. The backtest process is written in Python as a loop that iterates through each row of the dataframe, which is necessary but comparatively less efficient.
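The gap between the two approaches can be illustrated with a simple moving average computed both ways. This is a sketch on synthetic data, assuming numpy and pandas are installed; `sma_loop` and `sma_vectorized` are hypothetical helper names, not boatwright functions, and the measured times will vary by machine.

```python
import time

import numpy as np
import pandas as pd


def sma_loop(values, window):
    """Simple moving average computed row by row in pure Python."""
    out = [float("nan")] * len(values)
    for i in range(window - 1, len(values)):
        out[i] = sum(values[i - window + 1 : i + 1]) / window
    return out


def sma_vectorized(series, window):
    """Same average using a pandas rolling window."""
    return series.rolling(window).mean()


# Synthetic random-walk "close" prices; not real market data.
rng = np.random.default_rng(0)
close = pd.Series(rng.normal(size=10_000).cumsum() + 100.0)

t0 = time.perf_counter()
loop_result = sma_loop(close.tolist(), 14)
t_loop = time.perf_counter() - t0

t0 = time.perf_counter()
vec_result = sma_vectorized(close, 14)
t_vec = time.perf_counter() - t0

print(f"python loop:    {t_loop:.4f} s")
print(f"pandas rolling: {t_vec:.4f} s")
```

Both versions leave the first `window - 1` entries as NaN, so the results can be compared element by element.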

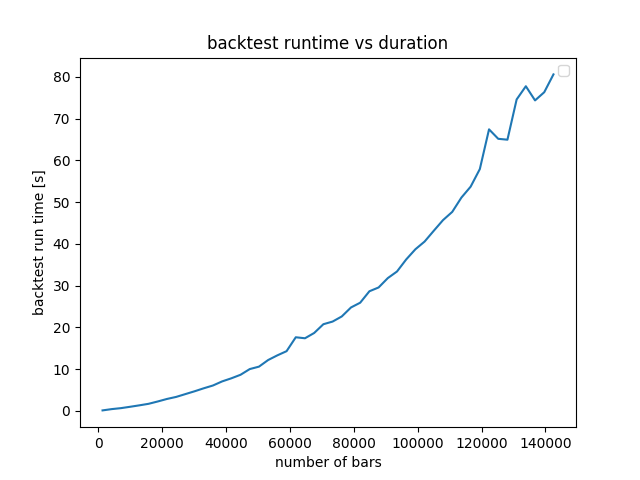

Next, backtest runtime is explored as a function of the number of bars, i.e. the length of the input dataframe, revealing an exponential relationship between the length of the backtest and its runtime. For scale, 100,000 bars of data, which run in ~40 s, correspond to a 69.44-day backtest at ‘MINUTE’ granularity, 11.42 years at ‘HOUR’ granularity, and 2.74 centuries at ‘DAY’ granularity.
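The bar-count conversions quoted above can be reproduced with a few lines of arithmetic. The sketch below assumes a granularity of 1 unit per bar and a 365-day year; `bars_to_days` is a hypothetical helper, not part of boatwright.

```python
# Number of bars that make up one day at each granularity unit.
BARS_PER_DAY = {"MINUTE": 24 * 60, "HOUR": 24, "DAY": 1}
DAYS_PER_YEAR = 365  # assumption: leap years ignored


def bars_to_days(n_bars, granularity_unit):
    """Convert a bar count to the calendar span it covers, in days."""
    return n_bars / BARS_PER_DAY[granularity_unit]


n_bars = 100_000
minute_days = bars_to_days(n_bars, "MINUTE")
hour_years = bars_to_days(n_bars, "HOUR") / DAYS_PER_YEAR
day_centuries = bars_to_days(n_bars, "DAY") / DAYS_PER_YEAR / 100

print(f"MINUTE: {minute_days:.2f} days")
print(f"HOUR:   {hour_years:.2f} years")
print(f"DAY:    {day_centuries:.2f} centuries")
```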

Python source code: ../../examples/performance_testing/backtest_vs_nbars.py

import boatwright

from MACD import MACD

from datetime import datetime, timedelta

import matplotlib.pyplot as plt

import numpy as np

broker = boatwright.Brokers.BacktestBroker(taker_fee=0, maker_fee=0, slippage=0, quote_symbol="USD")

strategy = MACD(fast_period=7, slow_period=14, symbol="BTC", broker=broker)

database = boatwright.Data.CSVdatabase(source="COINBASE")

backtests = []

end = datetime(year=2023, month=11, day=10, hour=1, minute=0)

days = np.arange(1,101,2)

for d in days:

    start = end - timedelta(days=int(d))

    data = database.load(symbol=strategy.symbol, start=start, end=end, prerequisite_data_length=strategy.calc_prerequisite_data_length(), granularity=1, granularity_unit="MINUTE")

    backtest = boatwright.Backtest(strategy=strategy, data=data)

    backtests.append(backtest)

executor = boatwright.BacktestExecutors.SequentialExecutor()

# executor = boatwright.BacktestExecutors.ParallelExecutor(n_procs=4)

backtests = executor.run(backtests, verbose=True)

n_bars = np.array([len(b.data) for b in backtests])

runtimes = np.array([b.runtime for b in backtests])

fig, ax = plt.subplots()

ax.set_title("backtest runtime vs duration")

ax.set_xlabel("number of bars")

ax.set_ylabel("backtest run time [s]")

ax.plot(n_bars, runtimes, label="SequentialExecutor")  # label gives ax.legend() an entry to show

ax.legend(loc="best")

plt.show()

Parallel processing of backtests is also supported. The analysis below executes a parameter scan composed of 23 backtests, first sequentially and then in parallel with 3 worker processes (n_procs=3), cutting the runtime from about 98 s to about 32 s, a roughly threefold speedup.

number of backtests: 23

length of backtest: 44331.0

sequential runtime: 98.0715708732605 s

parallel runtime: 32.0289888381958 s
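The speedup and the per-process efficiency follow directly from the two runtimes reported above. A short sketch of the arithmetic, with illustrative variable names:

```python
# Runtimes copied from the output above; n_procs matches ParallelExecutor(n_procs=3).
seq_runtime = 98.0715708732605
par_runtime = 32.0289888381958
n_procs = 3

speedup = seq_runtime / par_runtime  # how many times faster the parallel run is
efficiency = speedup / n_procs       # fraction of ideal linear scaling achieved

print(f"speedup: {speedup:.2f}x")
print(f"parallel efficiency: {efficiency:.2f}")
```

An efficiency close to 1 means the scan scaled almost linearly with the number of processes in this run.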

Python source code: ../../examples/performance_testing/sequential_vs_parallel.py

import boatwright

from boatwright.Optimizations import generate_parameter_combinations, ParameterScan

from boatwright.PerformanceMetrics import PercentProfit

from boatwright.BacktestExecutors import ParallelExecutor, SequentialExecutor

from MACD import MACD

from datetime import datetime

import time

if __name__ == '__main__':

    br = boatwright.Brokers.BacktestBroker(taker_fee=0.4, maker_fee=0.6, slippage=0, quote_symbol="USD")

    s = MACD(fast_period=1, slow_period=2, broker=br, symbol="BTC")

    start = datetime(year=2023, month=10, day=1, hour=0, minute=0)

    end = datetime(year=2023, month=11, day=1, hour=0, minute=0)

    db = boatwright.Data.CSVdatabase(source="COINBASE")

    data = db.load(symbol=s.symbol, start=start, end=end, granularity=1, granularity_unit="MINUTE")

    scan = {

        "fast_period": [25,50,75,100,125],

        "slow_period": [100,150,200,250,300]

    }

    parameters_sets = generate_parameter_combinations(scan)

    parameters_sets = [p for p in parameters_sets if (p["fast_period"] < p["slow_period"])]

    loss_function = lambda b: -PercentProfit().calculate(b)

    param_scan_seq = ParameterScan(strategy=s, loss_function=loss_function, scan=parameters_sets, data=data, executor=SequentialExecutor())

    param_scan_par = ParameterScan(strategy=s, loss_function=loss_function, scan=parameters_sets, data=data, executor=ParallelExecutor(n_procs=3))

    seq_start = time.time()

    param_scan_seq.make_backtests()

    optimal_strategy = param_scan_seq.run(verbose=True)

    seq_runtime = time.time() - seq_start

    par_start = time.time()

    param_scan_par.make_backtests()

    optimal_strategy = param_scan_par.run(verbose=True)

    par_runtime = time.time() - par_start

    backtest_lengths = sum([len(bt.data) for bt in param_scan_par.backtests]) / len(param_scan_par.backtests)

    print(f"number of backtests: {len(parameters_sets)}")

    print(f"length of backtest: {backtest_lengths}")

    print(f"sequential runtime: {seq_runtime} s")

    print(f"parallel runtime: {par_runtime} s")